This post is Part 2 of the series. Part 1, which explains Programs and Processes, can be found here.

Thread

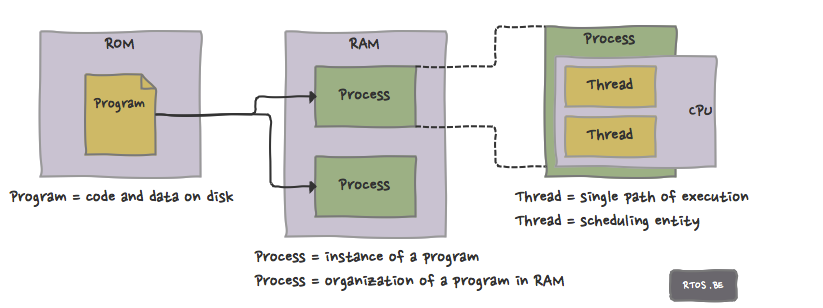

A thread is a single path of execution that the operating system can schedule onto a CPU. It has its own stack, program counter and set of registers.

A process (a living program and the container of all its resources, see Part 1) has one or more threads. Threads of the same process run in the same address space, so they can share, but also corrupt, each other's data. For applications in which data integrity and robustness are important, software architects therefore sometimes prefer several ‘lean’ processes with a low number of threads over one ‘big fat’ process with many threads.

Another benefit of this software architecture is easier software maintenance, thanks to improved testability (processes are typically easier to test than threads) and easier debugging (e.g. interprocess communication is more explicit because it has to cross the process boundary, and it is very clear which process has crashed). A disadvantage is run-time communication overhead, although this is system dependent, as the following quote [ref] shows: “In fact, if you are on a multi-processor system, not sharing may actually be beneficial to performance: if each task is running on a different processor, synchronizing shared memory is expensive.”

Of course, most of the time some information sharing is necessary, but it pays to think hard about what needs to be shared and what does not, and to limit both the number of common data structures and how often they are shared.

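Functions that are called from several threads should be re-entrant (working only on their arguments and local data) or otherwise thread-safe (protecting any shared data, e.g. with a mutex) in order to avoid race conditions and non-deterministic results.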

Threads are not always executing: they can also be waiting for an event (blocked) or waiting to be scheduled (ready). This brings us to three typical thread states:

- ready: ready to run,

- executing: running, and

- blocked: waiting for an event e.g. a resource (and thus not ready to run).

However, an operating system may define many more states, and the semantics of states can differ between operating systems (e.g. the distinction between blocked, suspended, pending, etc.).

It is the responsibility of the OS scheduler to decide which thread(s) get the CPU(s), and for how long. Depending on the scheduler class, threads can be assigned priorities. Thread priorities are a fundamental property of real-time operating systems (please check out our real-time series).

As opposed to a process:

A thread = a CPU- or ‘time’-related concept!

Example 1: Linux

The Linux kernel makes no distinction between processes and threads: both are represented by the same structure, struct task_struct. Task_structs that share memory could be called threads (the difference is only made explicit in user space).

A task_struct contains a lot of scheduler-related fields such as:

- scheduler info: sched_class, plus the sched_entity (se) and sched_rt_entity (rt) structures,

- several priority-related fields: prio, static_prio, normal_prio and rt_priority,

- state information: state (unrunnable, runnable, stopped) but also exit_state,

- …

The Linux task_struct is quite complex, which is a natural consequence of the many scheduling options and features it has to support.

Example 2: Integrity RTOS

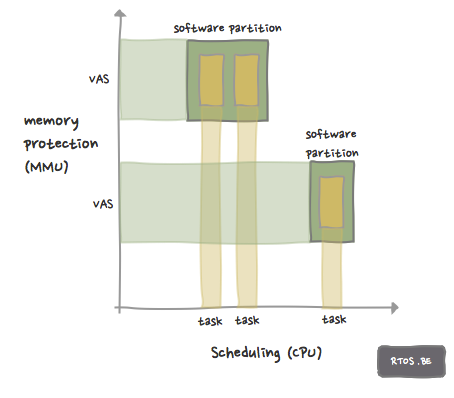

Integrity has Tasks as schedulable entities. Tasks belong to a Virtual Address Space (VAS) and represent the second dimension of a software partition (the VAS being the first dimension). Tasks have priorities: tasks with a high priority can preempt lower-priority tasks. The figure above gives an overview of all concepts.

Integrity provides the Enhanced Partition Scheduler to enforce strict time allocation between software partitions. For example, 75% of the CPU time could be allocated to the first software partition (which has 2 tasks), while the second partition only gets 25%.

In combination with memory protection, the Enhanced Partition Scheduler allows for strict separation in ‘time’ and ‘space’ between different software partitions. This is particularly important when a single controller houses a safety-critical and a non-safety-critical application. Both applications can then run independently.

Example 3: FreeRTOS

FreeRTOS supports preemptible, prioritized tasks. Task states are shown and listed here. More details on task priorities can be found here. Worth mentioning is the Idle Task, which ensures that there is always a task that is able to run. The Idle Task can be used to do idle-priority (background) work, e.g. through the idle hook function.

Next to tasks, FreeRTOS also supports co-routines. Co-routines share the same stack and use prioritized cooperative scheduling within the co-routine pool, but they can be preempted by a task. The concept of co-routines is similar to the concept of green threads, lightweight processes and coroutines in general. See also GNU Portable Threads or… learn Erlang (e.g. by reading the book ‘Programming Erlang: Software for a Concurrent World’ by Joe Armstrong).
